Information, Misinformation and Disinformation: Kristie Bunton on Elections, AI

This election cycle, TCU is presenting a series of events titled Elections, Democracy and Social Values. The series aims to explore various viewpoints and aspects of an election and engage TCU’s diverse community in voting as informed, responsible citizens.

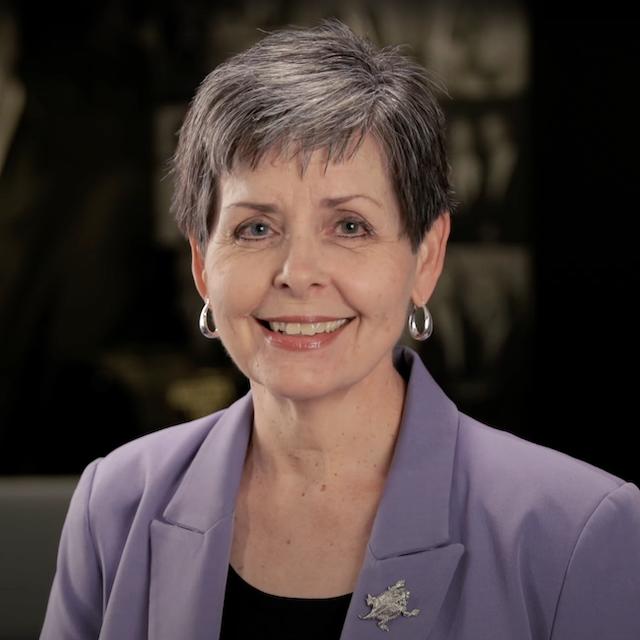

The second event in the series, Information, Misinformation and Disinformation in the Era of AI, will be moderated by Kristie Bunton, dean of the Bob Schieffer College of Communication. Ahead of the event, Bunton answered a few questions on the topic at hand for TCU News.

The title of the upcoming event includes the terms “information, misinformation and disinformation.” Let’s break those down. To create a baseline, what is information?

Generally, we think of information as facts, evidence and details that we may use to make decisions, such as where and when we can go to the polls to vote in an election. But factually accurate information may also be contextually inaccurate. If a journalist’s news report calls a candidate “Kamala Harris” or “Vice President Harris” or “Ms. Harris” or simply “Kamala,” those are all factually accurate pieces of information about the Democratic nominee for president. But choosing to use one description and not another may create or perpetuate a bias that affects the attitudes or behaviors of the audience who receives that information.

What is misinformation?

We usually think of misinformation as incorrect information. Misinformation is false. The reason for the misinformation may simply be that someone made a mistake. If a journalist types the wrong date or times the polls will be open, that journalist likely didn’t intend to communicate false information. But that journalist’s error – misinformation – can lead us to make a bad decision or create a bad consequence. The journalist who made the error didn’t mean to keep me from voting, but because the journalist posted the wrong time or date in the election guide the news organization distributed, for instance, I went to the polls too late on the evening of Election Day and found them closed, so I didn’t get to vote.

What is disinformation? And how does it differ from misinformation?

Disinformation is intended to create a harmful consequence. Disinformation is false or wrong information communicated by people or institutions who want to harm us in some way. Creators and distributors of disinformation mean to negatively affect those who receive that disinformation. I think disinformation and propaganda are related but different. Both disinformation and propaganda want to influence behaviors and attitudes, but disinformation wants to create damage. For example, recently, when the U.S. Department of Justice shut down several websites, it did so because the officials concluded that the Russian government was using Kremlin resources to build those websites to communicate disinformation that would influence the U.S. presidential election for the benefit of Russia.

This is the first presidential election where artificial intelligence has been at the forefront. Can you speak to what we are seeing?

I think some voters are worried that AI-generated information cannot be trusted when it comes to elections. So the same people who are happily using AI tools to generate information they use in their work lives, for instance, are worried that they can’t trust AI tools to provide accurate information to tell them about candidates’ stances on issues. And their worry may be justified, given what we know about how quickly generative AI tools can learn from their mistakes and create yet more messaging.

A few weeks ago, someone used fake AI-generated imagery of Taylor Swift that seemed to suggest the singer was endorsing former President Donald Trump. Some of her fans immediately said the Trump campaign was spreading misinformation. But was it misinformation – an honest mistake? Or was it disinformation – intended to mislead voters who might be swayed by an endorsement from Swift, who is arguably the single most influential entertainer in the world right now? What makes any of these kinds of misinformation or disinformation ethically troubling is the speed with which AI tools can generate and disseminate these messages.

As a consumer, how can I best be an informed voter and responsible citizen, given these challenges?

We have more access to more information, misinformation and disinformation than ever before. There’s no shortage of messaging. Given this confusing and overloaded landscape, I think the onus is on journalists to constantly fact-check the sources of information and the accuracy of it, as many national journalists did over recent days as allegations were raised through fake social media posts that immigrants from Haiti in an Ohio town were eating their neighbors’ pets.

The onus will also be on voters more than ever to check, double-check and triple-check the sources of the information they consume. I hope our panelists will be able to offer ideas about how we can all do that.

Elections, Democracy and Social Values: Information, Misinformation and Disinformation in the Era of AI will be held Sept. 25. Panelists will include Adam Schiffer, political science professor; Amber Phillips ’08, a journalist from the Washington Post; and Richard Escobedo ’16, a producer with Face the Nation.

For more on Elections, Democracy and Social Values, read Betting on Democracy: Grant Ferguson Discusses Political Predictive Markets.